MySQL database is one of the world’s most powerful open source databases because of its proven accomplishments, ease of use and trustworthiness. It has become a leading database choice for almost all web-based applications. Nowadays, database operations tend to be the major obstruction for several web applications. The database administrators are not solely required to worry about the performance issues. Being a programmer, you require to complete your part by organizing tables in the right way, writing good codes and optimized queries. In this article, we will be discussing some of the major MySQL optimization tricks for programmers.

Optimize Your Issues For Query Cache

Query caching comes enabled in most of the MySQL servers. It is considered as one of the most efficient methods of enhancing the performance that is managed by the database engine. When similar issue comes multifarious times, the result is then derived from the cache that is very fast. The major problem is that it is so simple and disguised from the programmer that it is mostly overlooked by you. Some tasks that you perform can hinder the query cache from performing its work.

1 2 3 4 5 6 | // query cache does NOT work $q = mysql_query("SELECT useremail FROM user WHERE signup_date >= CURDATE()"); // query cache works! $today = date("Y-m-d"); $q = mysql_query("SELECT useremail FROM user WHERE signup_date >= '$today'"); |

The main reason that does not work in the foremost line is because of the usage of CURDATE() function. This case implies to all non-deterministic features like NOW() and RAND(). As returning outcome of the function can be modified, MySQL plans to deactivate query caching for that specified query. All you require to do is to make an inclusion of additional line of PHP before the query for stopping this from happening.

EXPLAIN Your Selected Queries

Making use of the EXPLAIN keyword, you can get an idea as to what MySQL is doing to process your query. This can assist you in pointing out the bottlenecks and several other issues related to your query and table structures. The final output of an EXPLAIN query will showcase the indexes being used and how the table get sorted and scanned.

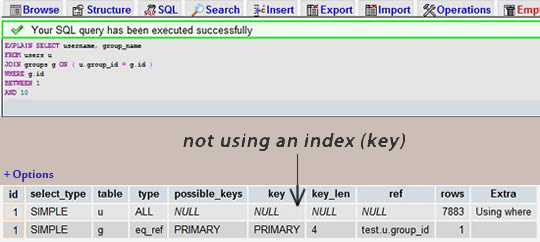

Now, take SELECT query and make addition of EXPLAIN keyword in front of it. Make use of phpmyadmin for this purpose that will present the result in proper table. For instance, let’s suppose you missed to add index to the column that you performed joins on:

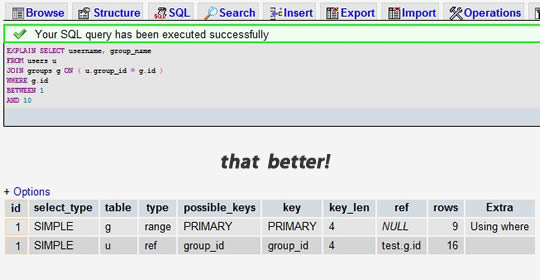

After making addition of index to the group_id field:

Rather than scanning 7883 rows, it will now only scan 9 and 16 rows from 2 tables. It is a thumb rule to multiply all the numeric under “rows” column, and then the performance of your query will be proportional to the resulting numeric.

Use LIMIT 1 For Obtaining An Exceptional Row

There are times when programmer is querying the tables and know in advance that he wants just one row. You might acquire a unique record or just check the presence of different records that fulfill your WHERE clause. Here, addition of LIMIT 1 to your query can enhance your performance. This way, database engine finishes scanning for records after getting 1 rather than going through the complete table or index.

1 2 3 4 5 6 7 8 9 10 11 12 13 | // do I have any user from Chicago? // what NOT to do: $q = mysql_query("SELECT * FROM user WHERE state = 'Chicago'"); if (mysql_num_rows($q) > 0) { // ... } // much better: $q = mysql_query("SELECT 1 FROM user WHERE state = 'Chicago' LIMIT 1"); if (mysql_num_rows($q) > 0) { // ... } |

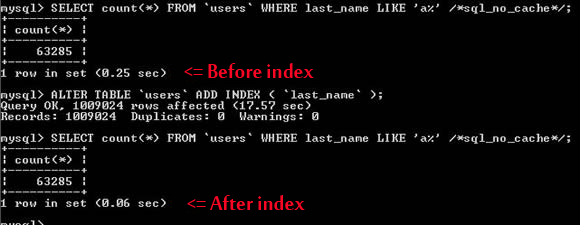

Index Search Fields

Indexes are not only meant for the unique keys or primary keys. If your table consists of any column that you will be searching for, then you should always index them.

It can be clearly seen that this rule also implies to a partial string search such as As you can observe, this rule also implies to a partial string of search like “last_name LIKE ‘a%'”. When you search right from the start of the string, MySQL is able to use the index on that column. It is vital for you to a know which kind of searches cannot utilize continuous indexes. For example, when you look for a word ( e.g. “WHERE post_content LIKE ‘%apple%'”), you will not get to see an advantage from a normal index. It will be advisable to use mysql fulltext search or creating your own indexing solution.

Index and Utilize identical Column Types for Joins

In case, your application consists of several JOIN queries, then ensure to index the columns you join on both tables. The effect can be seen as to how MySQL optimizes the connected operation from within.

Ensure to join such columns that are of same type. For example, if you connect an INT column, to a DECIMAL column from another table, then it won’t be possible for MySQL to use both the indexes. Also, the character encoding requires to be of the similar type for string type columns.

1 2 3 4 5 6 7 8 | // looking for companies in my state $q = mysql_query("SELECT companyname FROM users LEFT JOIN companies ON (users.state = companies.state) WHERE users.id = $userid"); // both state columns should be indexed // and they both should be the same type and character encoding // or MySQL might do full table scans |

Do Not Make ORDER BY RAND()

This is one of the major tricks that appears interesting at first and several rookie programmers get trapped in it. Once you begin to use this in your queries, you will not even know what incredible bottleneck you can design.

If you wish to get random rows as the outcome then there are better ways of completing it. Undoubtedly, it will require add on code but you need to protect a bottleneck that gets bad as your data grows. The major issue is that MySQL will need to perform RAND() operation that takes processing power for each single row in the table even before sorting it and providing you with only 1 row.

1 2 3 4 5 6 7 8 9 10 | // what NOT to do: $q = mysql_query("SELECT username FROM user ORDER BY RAND() LIMIT 1"); // much better: $q = mysql_query("SELECT count(*) FROM user"); $qry = mysql_fetch_row($q); $rand = mt_rand(0,$qry[0] - 1); $q = mysql_query("SELECT username FROM user LIMIT $rand, 1"); |

So, just select a random number less than the number of outputs and make use of it as offset in the LIMIT clause.

Always Come With an id Field

Every table comes with an id column which is AUTO_INCREMENT, PRIMARY KEY and among the flavors of INT. Also, it may be unsigned as the value cannot be negative.

Even if you are laced with users table that comes with an exceptional user name field, then don’t make it your fundamental key. VARCHAR fields work slower as fundamental keys.

You will also get behind the scenes operations conducted by the MySQL engine that makes use of the fundamental key field inside that becomes vital as the database setup gets complicated.

Abstain SELECT

As more data can be studied from the tables, the query will become slower. It enhances the time that it takes for disk operations. In addition to this, the database server can also be separated from the web server but you will have to face bigger network delays because of the data being transmitted between the servers. It is better to specify which columns you require when you are performing your SELECT’s.

1 2 3 4 5 6 7 8 9 10 11 | // not preferred $q = mysql_query("SELECT * FROM user WHERE user_id = 1"); $qry = mysql_fetch_assoc($q); echo "Welcome {$qry['username']}"; // better: $q = mysql_query("SELECT username FROM user WHERE user_id = 1"); $qry = mysql_fetch_assoc($q); echo "Welcome {$qry['username']}"; // the differences are more significant with bigger result sets |

Utilize ENUM over VARCHAR

Steady and compact in nature, ENUM type columns are internally stored like TINYINT but they do consist of and display string values. This feature makes them an apt candidate for specific fields. If there are fields that contain few kinds of values then utilize ENUM instead of VARCHAR. For instance, there can be a column named “status”, and consists of only values like “active”, “pending”, and many more.

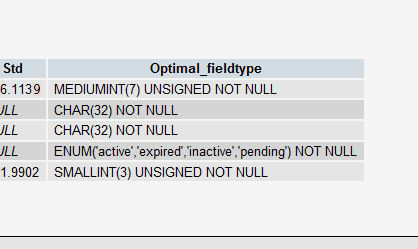

There is also a way to get “suggestion” from MySQL on how to reorganize the table. When you are provided with VARCHAR field, it can advise you to modify the column type to ENUM instead. This can be done using PROCEDURE ANALYSE() call that brings us to:

Get Advice with PROCEDURE ANALYSE()

PROCEDURE ANALYSE() enables MySQL to identify the columns structure and the original data in the table in order to provide some useful suggestions. It can only be beneficial if there is original data in the tables as they play a major role in decision making.

For instance, if you have developed an INT field for your fundamental key and do not have many rows, then it may advice you to make use of MEDIUMINT. And in case you are using VARCHAR field, you will get a suggestion to transform it to ENUM if only few exceptional values are left.

This feature can also be run by clicking “Propose table structure” link in phpmyadmin, among your table views.

Just remember that these are mere suggestions and in case your table grows bigger, these suggestions may not be right. The final call will be taken by you.

Utilize Not Null

Till the time you do not have any specified reason for using a NULL value, ensure to set your columns as NOT NULL. Firstly, make yourself clear whether there is any major difference between having a NULL value or an empty string value. If there is no specified reason for having both, then you will not require a NULL field.

NULL columns need add on space and can easily add complexity to your correlated statements so just ignore them if you can. However, there might be people who need specified reasons for having NULL values that is not a bad thing.

Prepared Statements

There are multifarious advantages of using prepared statements for both security and performance reasons. Prepared Statements filter the default variables bind to them which prevents your application from SQL injection attacks. You can obviously filter your variables manually but those methods are more liable to human error and carelessness by the programmer. This is not considered as a major issue while using distinct kind of framework or ORM.

Earlier, there were many programmers who overlooked prepared statement for one specified reason. They were not cached by MySQL query cache. But, with version 5.1, query caching was supported too. In order to utilize prepared statements in PHP, you need to check out MySQL extension or utilize database abstraction layer like PDO.

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 | // create a prepared statement if ($stmnt = $mysqli->prepare("SELECT username FROM user WHERE country=?")) { // bind parameters $stmnt->bind_param("s", $country); // execute $stmnt->execute(); // bind result variables $stmnt->bind_result($username); // fetch value $stmnt->fetch(); printf("%s is from %s\n", $username, $country); $stmnt->close(); } |

Unbuffered Queries

Generally, when a query is performed from a script, it will halt for the execution of that query to complete before it can start. You can make changes in that by making use of unbuffered queries.

There is a detailed explanation in PHP docs for the mysql_unbuffered_query() function

“mysql_unbuffered_query() sends the SQL query query to MySQL without automatically fetching and buffering the result rows as mysql_query() does. This saves a considerable amount of memory with SQL queries that produce large result sets, and you can start working on the result set immediately after the first row has been retrieved as you don’t have to wait until the complete SQL query has been performed.”

However, Unbuffered Queries come with certain limitations. You will either need to study all the rows or call mysql_free_result() before you get the chance to perform another query. Apart from this, you are not even permitted to use mysql_num_rows() or mysql_data_seek() on the final result set.

Store IP Addresses as UNSIGNED INT

There are several programmers who create a VARCHAR(15) field without even realizing that they can easily make use of the store IP addresses as integer values. With the assistance of an INT, you need to go down to only 4 bytes of space having a permanent size field. All you need to do is to ensure that your column is an UNSIGNED INT because IP Addresses utilize the wide range of a 32 bit unsigned integer.

In your queries, you can make use of INET_ATON() for converting an IP to integer and INET_NTOA() for vice versa. There are several comparable function in PHP like ip2long() and long2ip().

There are also similar functions in PHP called ip2long() and long2ip().

1 | $q = "UPDATE users SET ip = INET_ATON('{$_SERVER['REMOTE_ADDR']}') WHERE user_id = $user_id"; |

Fixed-length (Static) Tables are Steady

When every column in a table is “fixed-length”, then the table is also termed as “static” or fixed-length”. For instance, TEXT, VARCHAR or BLOB are some of the column types with no fixed length. If you make use of any one of these types of columns, then the table stops to be fixed-length and has to be managed separately by MySQL engine.

Fixed-length tables have the capability to enhance the performance as it is steady for MySQL engine to look for records. In case it wishes to view any specified row in a table, then it can easily calculate the position of it. If the size of the row is not set, then it needs to consult the fundamental index all the time it needs to view it.

They are simpler to cache and recreate after a crash but can occupy more space. For example, if you transform a VARCHAR(20) field to a CHAR (20) field, it will cover 20 bytes of space irrespective of the fact what is it in.

By making use of “Vertical Partitioning” techniques, you can easily remove the variable-length columns to an independent table that brings us to

Vertical Partitioning

Vertical Partitioning is basically the act of dividing the table structure in the upright manner for optimization reasons.

For instance, you may have a users table that consists of home addresses which do not get studied often. You can select to divide the table and save the address info on a new table. This will shrink the main users table in size. It is a well-known fact that smaller tables perform faster.

But you also need to ensure that you constantly do not require to join these 2 tables after the partition or you may suffer in performance decline.

Divide DELETE or INSERT Queries

If there is a requirement of performing a big DELETE or INSERT query on a live website then take care not to disturb the web traffic. When such a big query is performed, then it can lock your tables and your web application to a halt position.

Apache runs several parallel processes/threads. Thus, it works efficiently when scripts finish executing and the servers do not face many open connections at once that exhaust resources.

If you land up locking your tables for a long duration like 30 seconds or more on a huge traffic site, then you will create a process and query pileup that may take huge time to clear or even crash the web server. If there is any kind of maintenance script that can be needed to delete huge numbers of rows, then just utilize LIMIT clause for doing it in smaller batches to overcome such issue.

1 2 3 4 5 6 7 8 9 | while (1) { mysql_query("DELETE FROM logs WHERE log_date <= '2016-10-01' LIMIT 10000"); if (mysql_affected_rows() == 0) { // done deleting break; } // you can even pause a bit usleep(50000); } |

Smaller Columns Are Steady

When it comes to database engines, disk is considered as the most important bottleneck. In terms of performance, it is suggested to keep things smaller and compact for minimizing the amount of disk transfer. MySQL docs come with a big list of Storage Requirements for all types of data.

If the table is supposed to have few rows, then you don’t need to make the fundamental key an INT in place of SMALLINT, MEDIUMINT or in some situation TINYINT. If you don’t require time component, then make use of DATE instead of DATETIME.

Select The Right Storage Engine

The major storage engines in MySQL are InnoDB and MyISAM. Each comes with their own pros and cons. MyISAM is best for read-heavy applications but it does not scale good when there are several writes. Even if one field of a row is getting updated, the complete table gets locked and there is no process that can even read it until the query is completed. MyISAM is very quick at calculating SELECT COUNT(*) types of queries.

InnoDB tends to be a complex storage engine and can be slower than MyISAM for several small applications. But, this storage engine totally supports row-based locking, that scales better. In addition this, it also supports some latest features like transactions.

- MyISAM Storage Engine

- InnoDB Storage Engine

Make Use Of An Object Relational Mapper

Use ORM to gain specific performance benefits. Whatever an Object Relational Manager can do can be coded manually also. But, this also indicates too much extra work and top level of expertise. ORM’s are ultimate option for “Lazy Loading” which simply indicates that they can derive values only for what is needed. But you require to be utmost careful with them or you will land up creating several mini-queries that will surely minimize the performance.

ORM’s also has the power to batch your queries into transactions that will operate quicker than sending individual queries to the database.

Take Care With Persistent Connections

Persistent Connections are basically meant for minimizing the overhead of reconstructing connections to MySQL. When you develop a persistent connection, it will remain open even after the script stops running. As Apache reutilizes its child processes, so whenever the process runs for a new script, it will make use of the same MySQL connection again and again.

Select Fields That You Require

Extra fields generally boost grain of the data returned and hence result in additional data being returned to SQL client. Apart from this:

- At times, query may run at a fast pace but your issue can be related to network because huge amount of precise data is sent to reporting server across the network.

- While using analytical and reporting applications, there can be moderate report performance at times because the reporting tool needs to do aggregation as the data is received in concise form.

- When you use column-oriented DBMS, then only selected columns can be read from the disk. So, if you include less column in your query, then there will be minimal IO overhead.

Eliminate Outer Joins

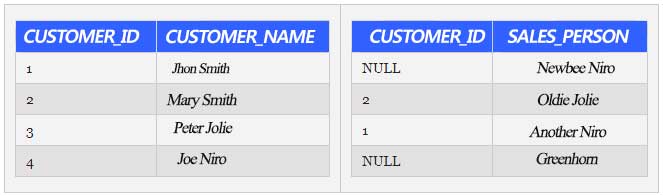

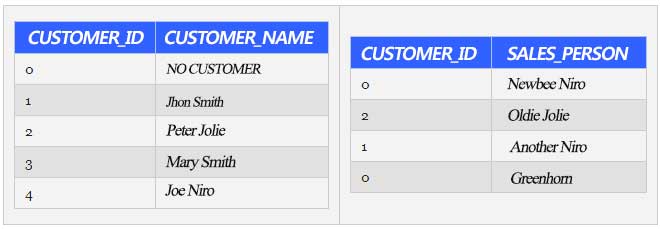

It all depends on how much impact you have in the changing table content. One of the key solutions is to eliminate the OUTER JOINS by placing placeholder rows in all the tables. For instance, you get the below given tables with an OUTER JOIN defined ensuring complete data is returned.

All you need to do is to make an addition of a placeholder row in the customer table and revise all the NULL values to placeholder key in the sales table.

You have not only eliminated the requirement for OUTER JOIN but you have also standardized how sales person are represented without any customers. Other developers are not required to write statements like ISNULL(customer_id, “No customer yet”).

You have not only eliminated the requirement for OUTER JOIN but you have also standardized how sales person are represented without any customers. Other developers are not required to write statements like ISNULL(customer_id, “No customer yet”).

Abolish Unnecessary Tables

The logic behind abolishing the unnecessary tables are same as that for removing fields not required in select statement. Writing SQL statements is basically a method which takes numerous iterations when you write and test SQL statements. At the time of development, you can also add tables to the query that will not have any effect on the data returned from the SQL code. Once the SQL is found right, many people do not even review their script and eliminate those tables that are of no use in final data returned. By eliminating JOINS from these unnecessary tables, you need to minimize the amount of processing database has to do.

Dismiss Calculated Fields in JOIN and WHERE Clauses

This method can be done by developing a field with the calculated values utilized in the join on the table. Given below is the SQL statement:

1 2 | FROM sales a JOIN budget b ON ((year(a.sale_date)* 100) + month(a.sale_date)) = b.budget_year_month |

You can easily enhance the performance by making addition of a column with month and year in the sales table. You can see the updated SQL statement below:

1 2 | SELECT * FROM PRODUCTSFROM sales a JOIN budget b ON a.sale_year_month = b.budget_year_month |

Accomplish Better Query Performance With These Quick Optimization Tips

- Make use of EXISTS rather than IN for checking existence of data.

- Refrain from having clause as it is used only if you wish to further filter the outcomes of aggregations.

- Unused indexes to be left out.

- Utilize TABLELOCKX when inserting into table and TABLOCK at the time of merging.

- In order to neglect deadlock condition, utilize SET NOCOUNT ON and use TRY- CATCH.

- Make use of Stored Procedure for commonly used data and complicated queries.

- If possible, try to avoid both nchar and nvarchar as these data types consume just the double memory as that of char and varchar.

- As Multi-statement Table Valued Functions are costlier than inline TVFs, try to avoid using it.

- Utilize Table variable instead of Temp table mainly because Temp tables need interaction with TempDb database that is a time-consuming task.

- Avoid usage of Non-correlated Scalar Sub Query. Utilize this query as a separate query rather than section of the primary query and store result in a variable that can be either referred to in the fundamental query or in the later part.

- Utilize WITH (NOLOCK) at the time of querying the data from any random table.

- Neglect prefix “sp_” along with the user defined stored procedure name as SQL server initially look for user defined procedure in the major database and then in recent session database.

- Before using the SQL objects name, utilize Schema name.

- With the aim to limit the size of result tables, use WHERE expressions which are developed with joins.

- Incorporate UNION ALL instead of UNION if you can.

- Make use of JOINS rather than sub-queries.

- Keep your transaction shorter as it locks the processing tables data and results into deadlocks.

- Try to neglect cursor as it is slow when it comes to performing.

- It is advisable to create indexes on columns having integer values rather than characters. Integer values come with less overhead as compared to character values.

- Selective columns should be kept in left side in the key of a non-clustered index.

Ending Notes

So, it is quite clear that SQl query can be written in several ways but it is advisable to follow the best practices in order to achieve the desired result. I hope that this article turns out to be helpful for you and explains you the entire process of MySQL query optimization. If you feel that we have missed out any point, then feel free to share your views in the comment section below.